Szerkesztő:Tgr/Jelölt változatok hatásának elemzése/en

In a nutshell

We looked at two questions: 1) Does the partial disabling of flagged revisions help with the problem of editor decline / stagnation? 2) How much extra load does it put on patrollers? (The third question would be the frequency of readers seeing vandalized content, but we did not have the capacity to calculate that.)

- The number of anonymous edits increased by ~30%; the number of anonymous editors by ~100% (although by the nature of anonymity, the latter is less reliable). We did not see any other effect: there was no increase in the registration rates; there was a slight increase in the number of edits by active editors and by logged-in editors, but it's hard to see it as a trend (the increase started a few months before the config change). So it seems that there is little impact on the growth of the editor community; in the future, if we find a good way to convert anonymous editors (e.g. with targeted messages after they edit), it might increase the efficiency of that.

- The ratio of bad faith or damaging edits grew minimally (2-3 percentage points); presumably it is positive feedback for vandals that they see their edits show up publicly. The absolute number of such edits grew significantly more than that, since the number of anonymous edits grew; the two effects together resulted in about 500 extra vandal edits and 800 extra good-faith damaging edits per month. The number of productive anonymous edits increased by 2000 per month. (Before disabling, the status quo was about 1000 vandal edits, 2000 damaging non-vandal edits and 7000 productive edits made anonymously per month.)

What are flagged revisions?

Flagged revisions are a software feature to support change patrolling, by which patrollers can mark the pages or page revisions they have reviewed as correct. The software has two main functions:

- it shows which changes have already been reviewed, so patrolling work can be distributed more efficiently (patrollers don't have to waste time on reviewing changes which have already been reviewed by another patroller, and pages left unpatrolled are flagged);

- it hides unreviewed changes from readers (non-logged-in users).

The first function is clearly useful and a big improvement to patrolling work. The second does on one hand prevent readers from seeing articles in their vandalized state, and possibly demotivates vandals; on the other hand it decreases the motivation of newbie or anonymous editors as well, as their edits often don't show up for a long time (often days or weeks)[1], and they get confused when the text that shows up during editing is different from what they saw before (where unreviewed changes were hidden). This might contribute to the declining number of editors. (Very active editors doing reliable work can apply to the list of trusted users whose edits show up immediately. Currently the list contains 750 users, which is about half a percent of all editors.[2]) There was much debate on which effect is more prominent, and which is more important; in the end the community of the Hungarian Wikipedia decided to run an experiment by disabling the second functionality.[3] After some delay this experimental change took place in April 2018; this page is a comparative analysis of the roughly one-year periods before and after the change.

Number of editors

Active editors have been growing slightly since summer 2017; there was no change to this during the experimental period. The growth between June 2017 and April 2018 is no larger than the random fluctuations in the (stagnating) 2015-2017 period, so it's hard to tell whether there was a random increase for a few months, followed by further increase caused by partially disabling flagged revisions; or growth due to some unknown cause that started half to one year before the experimental period and remained unchanged during it. The number of registrations has been basically constant since 2016 (before that it was declining slightly during 2012-16 and declining rapidly before that). There is no clear change during the experimental period.

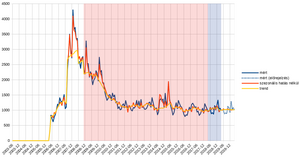

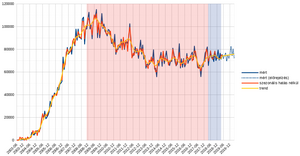

Number of edits

The number of anonymous edits grew spectacularly during the experimental period; it had shown a growing trend before that, but to a much smaller extent (and, as in the previous section, it's hard to say whether that was a real trend or just fluctuation). The number of logged-in edits is mostly stable; there is no clear trend either before or during the experimental period (although from the earlier 60-80 thousand range it has stabilized in the 70-80 thousand range lately).

Vandalism and damaging edits

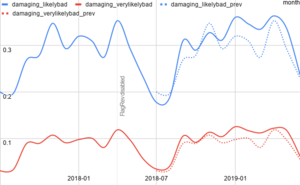

We can estimate the number of vandal or damaging edits with ORES. ORES is a machine classifier that has been trained by the editors of the Hungarian Wikipedia to recognize problematic and/or bad faith edits; on the modern recent changes interface it corresponds to the filters "Very likely good" / "May have problems" / "Likely have problems" / "Very likely have problems" and "Very likely good faith" / "May be bad faith" / "Likely bad faith" / "Very likely bad faith". The frequency of edits flagged by these filters in the one-year periods before and after the flagged revisions configuration change shows that the ratio of vandalism and well-meaning but unproductive edits increased, but only to an insignificant extent (2-3 percentage points).

ORES assigns a score between 0 and 1 to every edit telling how likely it thinks the edit is vandalism (and another score for it being unproductive); to measure the number of such edits, we need to declare a threshold (e.g. an edit above 0.8 is vandalism, edits below that aren't). The recent changes filters have such thresholds hardcoded into them. The "Likely bad faith" filter seems to match small-scale manual tagging of edits well (see e.g. [1], [2]); for problematic edits there wasn't a good basis for comparison so I just assumed that the threshold of the "Likely have problems" filter is likewise a good criterion. With those thresholds we get the following graphs:

Problematic edits by non-anonymous editors are rare (a few dozen per month) and can be ignored. (Numbers on them can be found in the linked sources.) Based on the above, the vandalism rate within anonymous edits increased from 11% to 13% after disabling, and the rate of non-productive edits (ORES includes vandalism in that) from 32% to 35%.[4] Taking into account that the total number of anonymous edits increased from ~6000 to ~9-10000, the total increase in vandalism was ~500 per month, and in well-meaning problematic edits ~800 per month (and in productive anonymous edits ~2000 per month).
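The arithmetic behind these monthly estimates can be reproduced with a short sketch. The rates and totals are the ones quoted above; the after-period total of 9500 is an assumed midpoint of the "~9-10000" range, and the function name `breakdown` is purely illustrative.

```python
def breakdown(total, vandal_pct, nonproductive_pct):
    """Split a monthly total of anonymous edits into three groups.

    nonproductive_pct already includes vandalism, so good-faith
    damaging edits are the difference between the two rates.
    """
    vandal = total * vandal_pct / 100
    nonproductive = total * nonproductive_pct / 100
    return {
        "vandal": vandal,
        "damaging_good_faith": nonproductive - vandal,
        "productive": total - nonproductive,
    }


# Before the change: ~6000 anonymous edits, 11% vandalism, 32% non-productive.
before = breakdown(6000, 11, 32)
# After the change: 9500 is an assumed midpoint of ~9-10000; 13% and 35%.
after = breakdown(9500, 13, 35)

# Monthly increase per group.
delta = {group: round(after[group] - before[group]) for group in before}
print(delta)
```

With these inputs the increases come out at roughly 575 vandal edits, 830 good-faith damaging edits and 2095 productive edits per month, in line with the rounded ~500 / ~800 / ~2000 figures. Since the rates themselves were read off the graphs by eye, the result should be treated as an order-of-magnitude estimate.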

The rate of vandal / non-productive anonymous edits is relatively stable; it only changed due to the partial disabling of flagged revisions. The total number of anonymous edits started growing about 10 months before the disabling, and the growth rate increased after disabling. It's hard to tell whether most of this growth would have happened anyway or it was just random fluctuation.
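The threshold-based counting described earlier in this section can be sketched as follows. This is only an illustration: the `ScoredEdit` type, its field names and the sample scores are hypothetical, and the 0.8 thresholds are the example value from the text, not the actual values hardcoded into the recent changes filters.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ScoredEdit:
    # Hypothetical container for the two scores of one edit (each 0..1).
    badfaith: float   # how likely the edit is vandalism
    damaging: float   # how likely the edit is unproductive


def classify(edit, badfaith_threshold=0.8, damaging_threshold=0.8):
    """Label an edit by comparing its scores with fixed thresholds."""
    if edit.badfaith > badfaith_threshold:
        return "vandalism"
    if edit.damaging > damaging_threshold:
        return "damaging"
    return "productive"


def rates(edits):
    """Return (vandalism rate, non-productive rate) for a list of edits.

    The non-productive rate includes vandalism, matching how the
    percentages are reported in this section.
    """
    counts = Counter(classify(e) for e in edits)
    total = len(edits)
    vandal_rate = counts["vandalism"] / total
    nonproductive_rate = (counts["vandalism"] + counts["damaging"]) / total
    return vandal_rate, nonproductive_rate


# Made-up scores, for illustration only.
sample = [
    ScoredEdit(badfaith=0.95, damaging=0.97),
    ScoredEdit(badfaith=0.10, damaging=0.85),
    ScoredEdit(badfaith=0.05, damaging=0.10),
    ScoredEdit(badfaith=0.02, damaging=0.03),
]
print(rates(sample))
```

Applying such a classification to each month's anonymous edits, before and after the configuration change, would give the kind of monthly rates discussed above; the choice of threshold directly shifts the resulting percentages.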

What other analyses did we try?

Other attempts which did not produce anything useful:

- Wikistats editor stats split by editor activity (e.g. editors who made 1-4 edits in the given months): [3], [4]. As it turns out, these include IP edits, making them not very useful.

- Number of editors who made 5/25/100 edits in their first month after registration. [5] There was no prominent change around the time of disabling (and the data volume is too small to identify small changes).

- Age pyramid by registration date of editors who made at least 5 or 25 edits in the last 30 days. [6] There was no prominent change around the time of disabling (and the data volume is too small to identify small changes).

Impressum

The examination and trend analysis of the number of editors, edits and registrations was done by Samat; the ORES-related analysis and those in the other analyses section were done by Tgr. (The former can be found in more detail here, in Hungarian.)

- ↑ Immediately after saving the edit, editors always see their changes, even if they are anonymous; but later, e.g. after reloading the page, they disappear.

- ↑ There are ~15000 editors with at least 10 edits. Both this and the previous number contain currently inactive editors as well.

- ↑ To be more exact: the second functionality was only partially disabled, pages can still be manually switched into "flagged protection mode" where readers see the last reviewed revision. This was used extremely rarely so it has no effect on the analysis.

- ↑ Measured by eyeballing. Since there is a fair bit of fluctuation, it did not seem worth doing something more systematic.